The rise of generative AI (GenAI) and large language models (LLMs) has pushed the industry's biggest names to keep investing in everything AI. With that in mind, Microsoft recently announced Phi-3, its new family of open models. Unlike LLMs such as Gemini and the GPT models behind ChatGPT, however, Microsoft describes the Phi-3 models as cost-effective small language models, or SLMs.

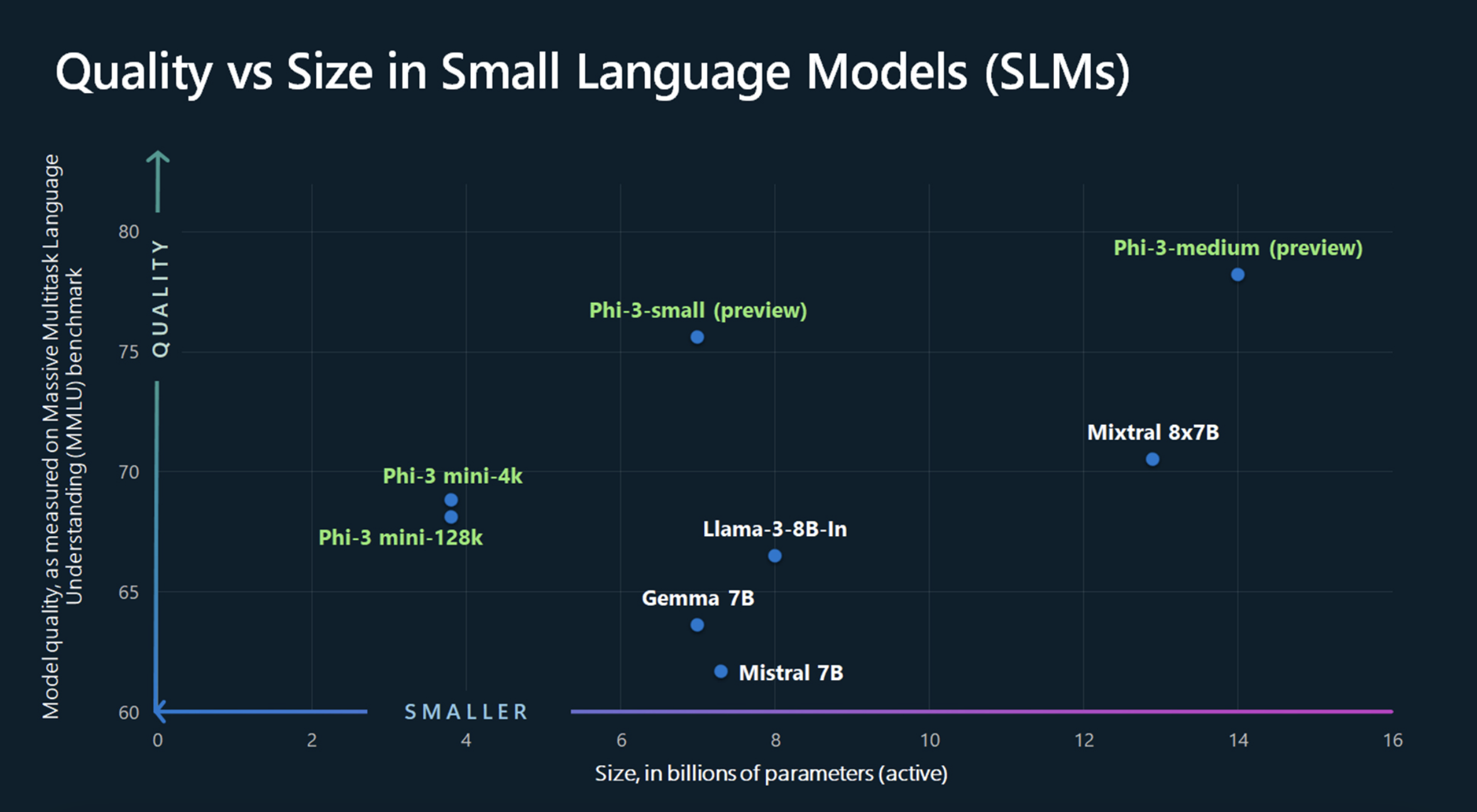

The company says its new Phi-3 models outperform models of the same size, and even models of the next size up, across a range of benchmarks covering language, coding and math. Microsoft attributes this to training innovations developed by its researchers.

The first Phi-3 model Microsoft is making publicly available is Phi-3-mini, which has 3.8 billion parameters and, according to the company, outperforms models twice its size. Phi-3-mini will be available in the Microsoft Azure AI Model Catalog, on Hugging Face, a platform for machine learning models, and on Ollama, a framework for running models on a local machine. It will also be available as an NVIDIA NIM microservice with a standard API.

Finally, more Phi-3 models are coming soon to offer additional choice across quality and cost: Phi-3-small (7 billion parameters) and Phi-3-medium (14 billion parameters). Both will be available in the Azure AI Model Catalog and other model gardens later on.

Source: Microsoft